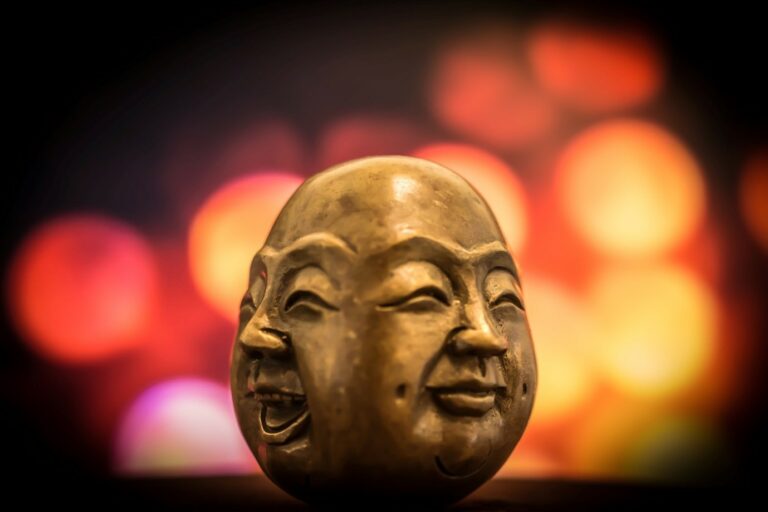

We have all heard that you never get a second chance at a first impression, but what if an AI were making that judgement? Facial emotion recognition (FER) is the use of AI software to analyse features of a human facial expression and, based on certain identifiers, automatically infer the person’s emotional state. FER algorithms are built on the work of human facial coders and on millions of human faces, and they detect facial coding units such as lip curl, eyebrow raise and brow furrow. By combining these facial coding units, the AI produces probability values that express the likelihood that you are feeling a certain emotion. Present FER technologies analyse faces against eight classified emotions: Anger, Contempt, Disgust, Fear, Happiness, Neutral, Sadness, and Surprise. Humans, however, display many more than eight emotions and use more than just their faces to express them. As FER enters the tech space, the main critiques question its accuracy and whether it can comply with the data protection principles of fairness and transparency.
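To make the mechanism above more concrete, here is a minimal sketch in Python of how detected facial coding units might be turned into probability values over the eight emotions. It is an illustration under stated assumptions, not how any particular FER product works: the weights are invented for the example, the `lip_corner_pull` unit (a smile) is a hypothetical addition to the units named above, and the softmax step is simply one common way to turn scores into probabilities.

```python
import math

# The eight emotion classes named in the article.
EMOTIONS = ["Anger", "Contempt", "Disgust", "Fear",
            "Happiness", "Neutral", "Sadness", "Surprise"]

# HYPOTHETICAL weights: how strongly each facial coding unit counts as
# evidence for an emotion. These numbers are invented for illustration only.
WEIGHTS = {
    "brow_furrow":     {"Anger": 2.0, "Sadness": 0.5},
    "eyebrow_raise":   {"Surprise": 2.0, "Fear": 1.0},
    "lip_curl":        {"Contempt": 1.5, "Disgust": 1.5},
    "lip_corner_pull": {"Happiness": 2.5},  # hypothetical unit for a smile
}


def emotion_probabilities(units):
    """Map unit intensities (0.0 to 1.0) to a probability for each emotion."""
    scores = {emotion: 0.0 for emotion in EMOTIONS}
    for unit, intensity in units.items():
        for emotion, weight in WEIGHTS.get(unit, {}).items():
            scores[emotion] += weight * intensity

    # Softmax: exponentiate the scores and normalise so they sum to 1.
    peak = max(scores.values())
    exps = {emotion: math.exp(score - peak) for emotion, score in scores.items()}
    total = sum(exps.values())
    return {emotion: value / total for emotion, value in exps.items()}


def top_emotion(units):
    """Return the emotion with the highest probability."""
    probs = emotion_probabilities(units)
    return max(probs, key=probs.get)


if __name__ == "__main__":
    # A smile is scored the same way whether it is genuine or merely polite.
    print(top_emotion({"lip_corner_pull": 1.0}))
```

Note that the sketch sees only facial coding units. A genuine smile and a polite one produce the same input and therefore the same label, which is the limitation the accuracy critique turns on.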

FER Uses

Despite these critiques, FER could have useful applications across many industries such as healthcare, automotive and security. In healthcare, it could be used to predict depression or psychotic disorders, help identify someone in need of assistance, or observe a patient during treatment. Another potential benefit is detecting driver fatigue, which could prevent accidents. Even with these potential benefits, however, considerable restraint should be exercised, as deploying this technology prematurely could have devastating consequences for individuals. WeSee offers its FER technology to security and law enforcement so that those with suspicious micro-expressions and eye movements can be identified. WeSee also offers to assist with claims fraud by giving assessors insight into a claimant’s facial cues, detected via app or video. In the financial sector, an unnamed firm wanted to use AI to determine to whom it should lend money, even though it was noted that the AI would not be free of bias surrounding race and gender.

This technology is also used in human resources. Candidates interviewing for a job may be required to answer questions in a recorded video, and FER technology analysing that video could determine a candidate’s fate based on its first impression.

If FER is misused or mistaken in these situations, the consequences could be damaging. It may mistakenly view a job candidate as angry or disgusted, in which case that candidate could be eliminated. Or, more seriously, an individual could be wrongfully targeted and detained.

Accuracy

FER technology combines biometric data, facial recognition and artificial intelligence technologies to create a data privacy nightmare. Human expression and emotion are arguably among an individual’s most private data; after all, emotions define how we interact with others and express our feelings. With the FER technologies currently available, it is almost impossible to imagine how they could be compatible with UK GDPR principles. Beginning with accuracy, a system that registers only eight emotions cannot possibly capture every human emotion. At any given time, the face can convey many different emotions, or a mix of simultaneous emotions, that go well beyond those eight. An indication of true happiness is a Duchenne smile, when your cheeks raise and your smile reaches your eyes, but humans are also capable of a fake smile or of smiling politely on demand. In all of these cases you are smiling, which FER would register as happiness, yet in the latter two that is not what you are truly feeling. FER cannot register the wider context in which your emotions are displayed. Additionally, body language plays a significant part in portraying human emotion, and FER does not take it into account. Crossing your arms is commonly read as a sign of anger, but this action could be done with a sarcastic smile on your face. FER would likely analyse your expression as happiness when your true emotion is likely to be quite the opposite.

Furthermore, a happy or sad memory can trigger a new emotion and facial expression seemingly at random. FER cannot explain the reasoning behind an emotion, so how could it possibly infer a person’s emotion accurately? These are only a few examples of the inherent flaws of FER, which make it hard to believe that this technology has been implemented at all.

Fairness

It is also important to note that FER technology exhibits racial bias. Lauren Rhue studied a data set of professional basketball players’ faces and found racial disparities, with black men more likely to be assigned negative emotions. The study suggests that this happens in two ways. First, the AI consistently assigns more negative emotions when analysing black faces. Second, the AI could be interpreting ambiguous facial expressions more negatively for black faces.[1] Regardless of why FER attributes more negative emotions to persons of African descent, it is clear that this should be a major deterrent to using this technology. With this information, it is terrifying to think that in the not so distant future, this technology could be used in the criminal justice system. Police forces are already attempting to use facial recognition technology.[2] Implementing FER without solving its racial bias could only mean more discrimination and racial profiling, leading to more false arrests and unjust detentions.

Transparency

Courtesy of surveillance, mobile phones and social media, facial expressions can be captured anywhere. This makes it difficult to be transparent about how data is collected and processed, and remote capture poses particular challenges. Together, these factors make it extremely difficult to adequately inform an individual of all the systems or applications that will process their data. Even if consent were obtained, informing data subjects how their data will be aggregated or where it could be going would be a massive challenge. Without sufficient transparency, data subjects could be left vulnerable and exposed.

Conclusion

FER technologies may seem beneficial for companies and industries, but for individuals there is too much at stake. Microsoft and Google, both high-profile tech giants, have restricted emotion recognition technologies, with Microsoft removing the FER technology it offered via Azure. If these large tech companies are limiting their use of this kind of technology, this should be a sign that it is under-developed and dangerous. It is nearly impossible to see how this technology, as it currently stands, could be GDPR compliant. It violates the core principles of accuracy and fairness, and full transparency is nearly unachievable. Ultimately, facial expressions alone cannot accurately reveal individuals’ emotions, and using FER prematurely could infringe on individuals’ right to privacy.

[1] Rhue, L. (2018). Racial Influence on Automated Perceptions of Emotions. SSRN Electronic Journal. https://doi.org/10.2139/ssrn.3281765

[2] https://www.libertyhumanrights.org.uk/issue/what-is-police-facial-recognition-and-how-do-we-stop-it/